MMoE - Multi-gate Mixture-of-Experts KDD2018

Model structure

Key points from the paper

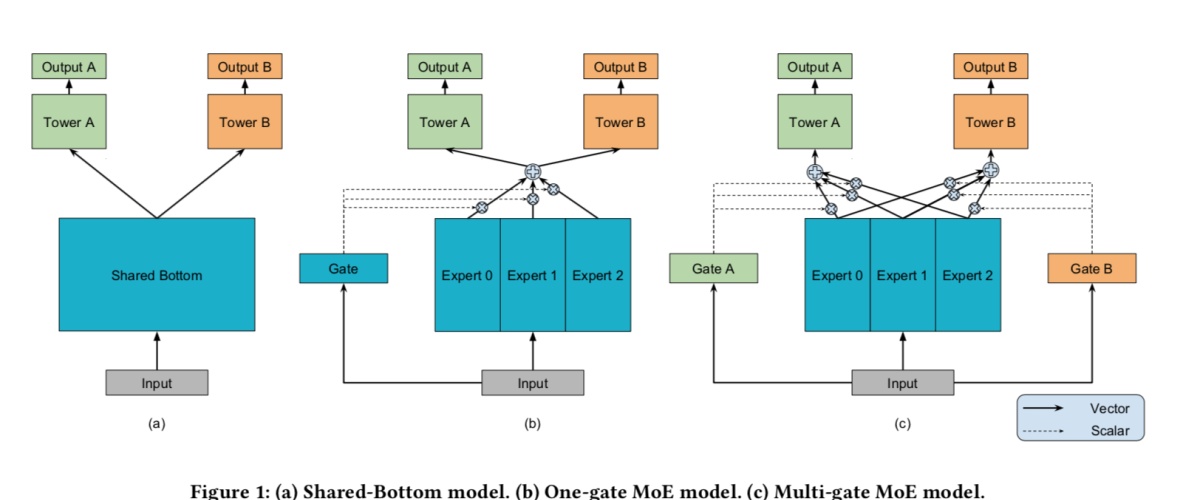

- adapts the Mixture-of-Experts (MoE) structure to multi-task learning by sharing the expert submodels across all tasks, while giving each task its own gating network

- explicitly models the task relationships and learns task-specific functionalities to leverage shared representations.

- modulation and gating mechanisms can improve the trainability in training non-convex deep neural networks
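
As a compact restatement of the points above, the multi-gate formulation can be written as follows. This is a sketch in the notation I recall from the paper, so treat the symbol names as assumptions: $f_i$ is the $i$-th shared expert, $g^k$ is the gate for task $k$, $h^k$ is the task-specific tower, and $W_{gk}$ is the gate's weight matrix.

$$
\begin{aligned}
y_k &= h^k\big(f^k(x)\big), \\
f^k(x) &= \sum_{i=1}^{n} g^k(x)_i \, f_i(x), \\
g^k(x) &= \operatorname{softmax}(W_{gk}\, x).
\end{aligned}
$$

The experts $f_i$ are shared by every task, while each task $k$ mixes them with its own gate $g^k$; that is where the task-specific behavior comes from.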

Structure overview

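- Each expert network can be regarded as a neural network.

- g is the gating network that combines the experts' results. Specifically, g produces a probability distribution over the n experts, and the final output is the weighted sum of all the experts' outputs.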
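
The gating step described above can be sketched in a few lines of plain Python. This is a minimal illustration, not the paper's implementation: each expert is assumed to be a single ReLU layer, each gate a single linear layer followed by softmax, the task-specific towers are omitted, and all names (`mmoe_forward`, `expert_weights`, `gate_weights`, the layer sizes) are hypothetical.

```python
import math
import random


def softmax(scores):
    """Turn a list of scores into a probability distribution."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def linear(x, W):
    """Multiply vector x (length d_in) by matrix W (d_in rows, d_out columns)."""
    return [sum(xi * row[j] for xi, row in zip(x, W)) for j in range(len(W[0]))]


def mmoe_forward(x, expert_weights, gate_weights):
    """Return (task_outputs, gate_probs) for one input vector x.

    expert_weights: n matrices, one per shared expert.
    gate_weights:   K matrices, one per task, each mapping x to n scores.
    """
    # Assumption: every expert is a single ReLU layer shared by all tasks.
    expert_outs = [[max(v, 0.0) for v in linear(x, W)] for W in expert_weights]
    n = len(expert_outs)
    d_out = len(expert_outs[0])

    task_outputs, gate_probs = [], []
    for Wg in gate_weights:
        g = softmax(linear(x, Wg))  # probability distribution over the n experts
        # Weighted sum of the expert outputs, using this task's gate.
        mixed = [sum(g[i] * expert_outs[i][j] for i in range(n)) for j in range(d_out)]
        task_outputs.append(mixed)
        gate_probs.append(g)
    return task_outputs, gate_probs


def rand_matrix(rng, rows, cols):
    return [[rng.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]


# Hypothetical sizes: 8 input features, 3 experts, 2 tasks, 5 expert outputs.
rng = random.Random(0)
d_in, d_out, n_experts, n_tasks = 8, 5, 3, 2
x = [rng.gauss(0.0, 1.0) for _ in range(d_in)]
experts = [rand_matrix(rng, d_in, d_out) for _ in range(n_experts)]
gates = [rand_matrix(rng, d_in, n_experts) for _ in range(n_tasks)]
outs, probs = mmoe_forward(x, experts, gates)
```

Each entry of `probs` sums to 1, and each entry of `outs` is the corresponding task's mixture of the same shared expert outputs. In a full model, every mixed vector would then be fed into that task's own tower network.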

References

- https://zhuanlan.zhihu.com/p/55752344?edition=yidianzixun&utm_source=yidianzixun&yidian_docid=0LC8kTgk